
Objective

Explore the potential of a low-cost, marker-based motion capture system and develop virtual reality (VR) applications that use real-time tracking data to provide an interactive VR experience.

Using this motion capture system, we aim to develop innovative and exciting VR applications and, moreover, to turn simple games into more interesting ones by using real-world tracked objects to control virtual game objects. To achieve this, we are going to use the VR wall setup in xLab, UPenn, which has 5 IR emitters paired with 5 grayscale camera sensors, configured so that together they act as a marker-based motion capture system.

We will be using OptiTrack Motive to capture tracking data from the motion capture system. Our final goal is to integrate that data with tools built for virtual reality applications, such as the VRUI toolkit, Unity3D, and the LiquidFun physics engine, and finally to provide a more immersive experience with the Oculus Rift.

Motivation

- Beneficial:

There are many areas where virtual reality can be beneficial, for example advanced games, education, entertainment, and healthcare. We plan to explore at least some of them, if not all.

- Challenging:

The field of virtual reality has been evolving rapidly in recent years, yet very few resources are available for developers. That is why we find it challenging, and we hope to contribute to the VR community with applications that integrate motion tracking with Unity.

- Exciting:

Developing a virtual reality application is definitely exciting because you get to interact with something that is not real. The words "Creating Virtual Reality" should be enough to convey the excitement behind it.

Project Components

- Virtual Wall setup with 5 grayscale cameras and IR emitters

- Calibration Square & Wand to calibrate the VR wall setup

- Rigid bodies designed using retro-reflective markers

- OptiTrack Motive for collecting and processing data of rigid bodies

- VRUI toolkit to develop the virtual reality application

- LiquidFun physics engine to add special VR effects

- Unity3D cross-platform game development platform

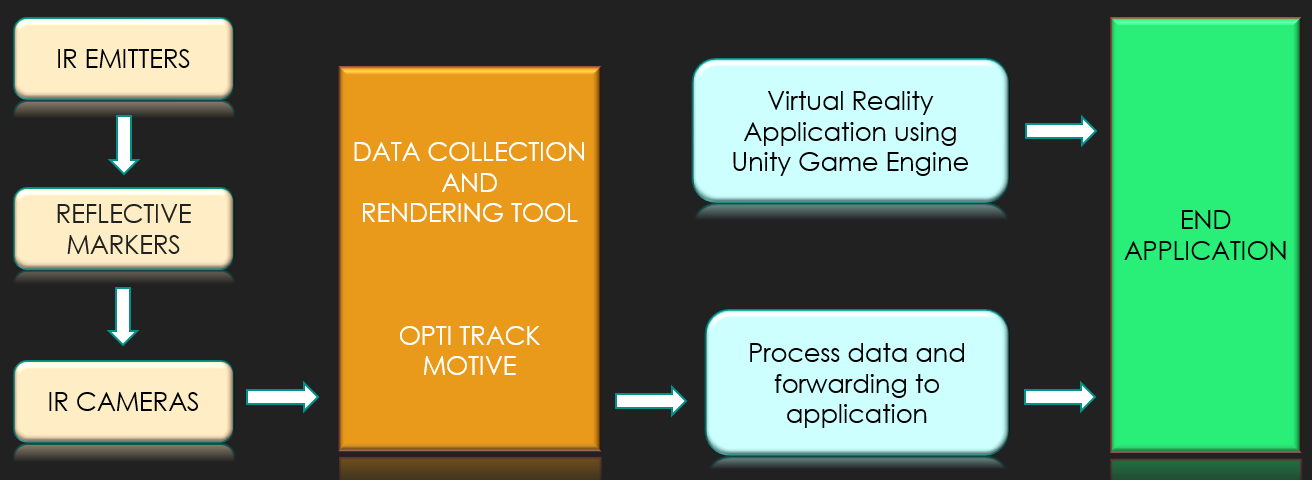

System Design

Videos/Updates

We will post our project updates here as short video clips with descriptions.

Week-1 Update

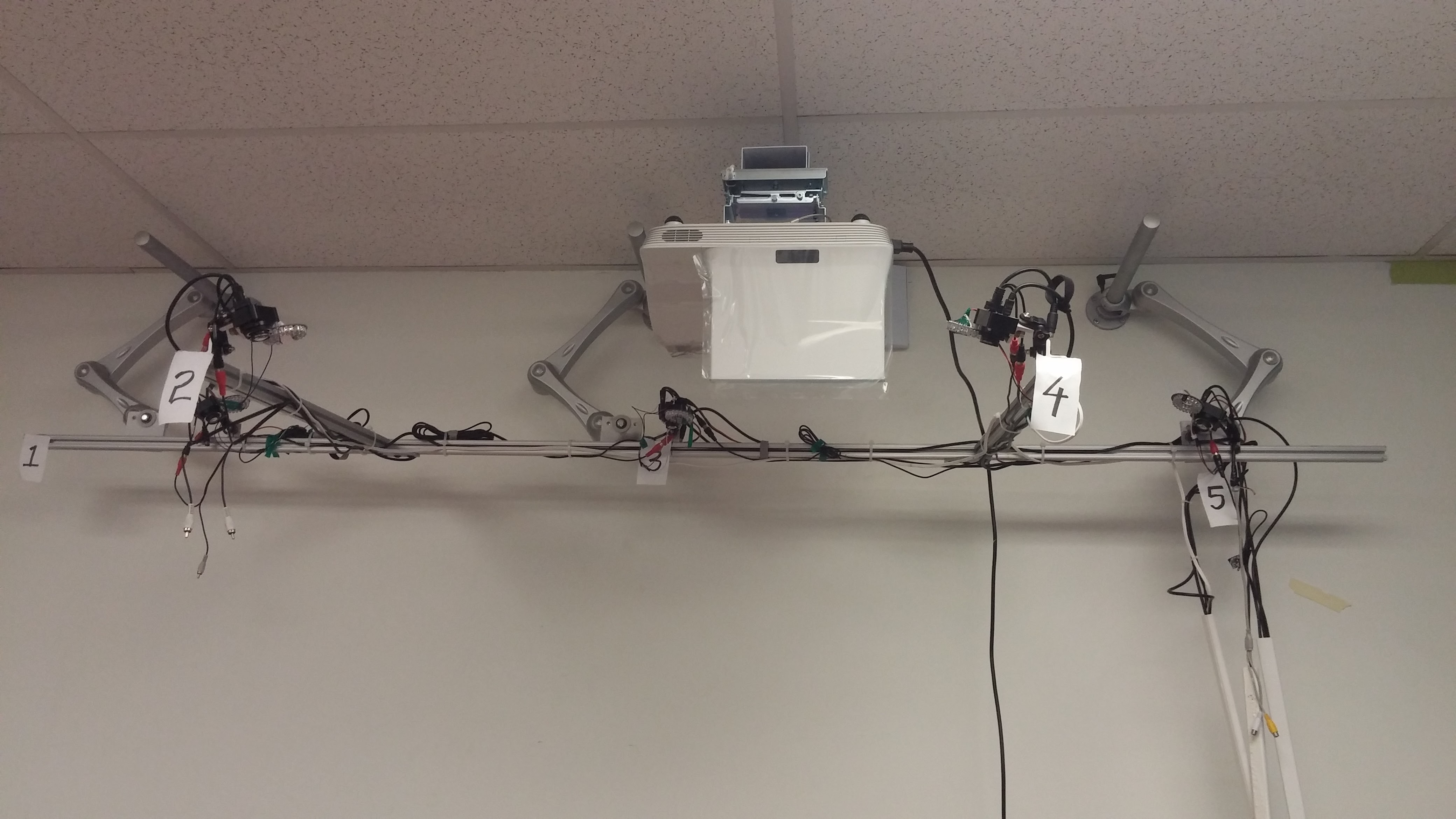

We spent the first week exploring the OptiTrack software and the VRUI toolkit. We also set up the virtual wall, which has five grayscale cameras and IR emitters, as shown in the figure. The IR emitters emit infrared radiation, and the cameras capture the radiation reflected from rigid bodies. Rigid bodies are built from retro-reflective markers because these reflect IR radiation back toward its source with minimal scattering; that is why each camera's sensor is mounted very close to its IR emitters. We also made a wand-like rigid body from 3 markers and a selfie stick, which is also shown in the figure.
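Motive defines a rigid body from three or more markers. As a rough illustration of why three markers are enough to recover both position and orientation, here is a minimal Python sketch that builds a pose from three non-collinear marker positions. It is a simple geometric construction chosen for illustration, not Motive's internal algorithm, and the helper names are hypothetical.

```python
import math

Vec3 = tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(a: Vec3) -> Vec3:
    length = math.sqrt(a[0] ** 2 + a[1] ** 2 + a[2] ** 2)
    if length < 1e-9:
        raise ValueError("markers are coincident or collinear")
    return (a[0] / length, a[1] / length, a[2] / length)


def rigid_body_pose(m0: Vec3, m1: Vec3, m2: Vec3):
    """Hypothetical helper: (centroid, (x_axis, y_axis, z_axis)) from three markers."""
    centroid = tuple((m0[i] + m1[i] + m2[i]) / 3.0 for i in range(3))
    x_axis = normalize(sub(m1, m0))                 # along the first marker pair
    z_axis = normalize(cross(x_axis, sub(m2, m0)))  # normal to the marker plane
    y_axis = cross(z_axis, x_axis)                  # completes a right-handed frame
    return centroid, (x_axis, y_axis, z_axis)
```

The three markers must not lie on one line; if they do, the plane normal is undefined and the sketch raises an error instead of returning a meaningless orientation.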

OptiTrack Motive is the motion capture software we use to collect the position and orientation data of rigid bodies from the cameras. Once this data is collected and processed, we will send it via VRPN (Virtual Reality Peripheral Network) to the Virtual Reality User Interface (VRUI) toolkit running on a different machine. Using this data and the toolkit, we can develop VR applications in a scalable and portable way.
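VRPN clients conventionally address a tracker as `Device@host`, optionally followed by `:port`, and VRPN's standard server port is 3883. The minimal Python sketch below splits such an address, which can help when checking what the VRUI machine should connect to. The device and host names are hypothetical placeholders, and the parser deliberately ignores other address forms VRPN may accept (for example IPv6 addresses).

```python
DEFAULT_VRPN_PORT = 3883  # VRPN's standard server port


def parse_vrpn_address(address: str) -> tuple[str, str, int]:
    """Split 'Device@host' or 'Device@host:port' into (device, host, port)."""
    device, at, location = address.partition("@")
    if not at or not device or not location:
        raise ValueError(f"expected 'Device@host[:port]', got {address!r}")
    host, colon, port_text = location.rpartition(":")
    if colon:
        return device, host, int(port_text)
    return device, location, DEFAULT_VRPN_PORT
```

For example, `parse_vrpn_address("Wand@192.168.1.20")` returns `("Wand", "192.168.1.20", 3883)`.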

Week-2 Update

The next step was to calibrate the setup so that rigid bodies are detected correctly. We found some good tutorials on how to perform the calibration, but we had trouble placing the markers accurately on the rigid body, so calibration took far longer than it should (it normally finishes in under 10 minutes). After some experimentation and research, we resolved the problem by using proper wands built with nuts and bolts. Using a selfie stick with markers taped to it as the wand was not a good idea in the first place, but it was fun. A demonstration of the calibration process is included in the Tutorials section.
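Wand calibration relies on the wand's markers staying at a fixed, known spacing, which is one reason loosely taped markers make calibration slow. A quick sanity check, sketched below in Python under that assumption, is to measure the distance between the wand's end markers over many frames and see how much it varies. The function name, the 1 mm default tolerance, and the expected length in the example are hypothetical; use the spacing specified for your own wand.

```python
import math
import statistics


def wand_length_report(frames, expected_mm, tolerance_mm=1.0):
    """Summarize end-marker spacing over frames of ((x, y, z), (x, y, z)) in mm.

    Returns (mean length, population std. dev., fraction of frames within tolerance).
    """
    lengths = [math.dist(a, b) for a, b in frames]
    mean = statistics.fmean(lengths)
    spread = statistics.pstdev(lengths)
    within = sum(abs(length - expected_mm) <= tolerance_mm for length in lengths)
    return mean, spread, within / len(lengths)
```

A large spread, or a low fraction of frames within tolerance, suggests the markers are shifting on the wand and are a likely cause of slow calibration.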

Besides calibration, we also installed the VRUI toolkit on the Linux machine and tried some of its example programs. They look quite interesting; the next step is to get the OptiTrack data into the VRUI toolkit.

Week-3 Update

We followed the tutorial included with the VRUI toolkit to connect VRUI to OptiTrack via VRPN. We ran into many problems along the way but were able to resolve them with the help of Oliver Kreylos, who developed the VRUI toolkit.

Week-4 Update

For VR application development, we switched from the VRUI toolkit to Unity 3D because of the limited resources available for VRUI. We first connected OptiTrack Motive to Unity and then developed a basic ping pong game in Unity in which one of the rackets is controlled by tracking the position of a rigid body. Here is a quick demo:
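Two details come up when driving a Unity object from Motive data. First, Unity uses a left-handed, Y-up coordinate system, while Motive by default uses a right-handed, Y-up one, so a pose may need to be mirrored; depending on the streaming plugin in use, this conversion may already be done for you. Second, the tracked position has to be mapped onto the game's range of motion. The Python sketch below shows both under those assumptions; the function names and calibration ranges are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose:
    position: tuple[float, float, float]         # metres
    rotation: tuple[float, float, float, float]  # quaternion (x, y, z, w)


def motive_to_unity(pose: Pose) -> Pose:
    """Mirror across the YZ plane: right-handed (assumed Motive) to left-handed (Unity)."""
    x, y, z = pose.position
    qx, qy, qz, qw = pose.rotation
    # Negating x flips handedness; the matching quaternion negates y and z.
    return Pose((-x, y, z), (qx, -qy, -qz, qw))


def paddle_y(tracked_y, tracked_min, tracked_max, court_half_height):
    """Map tracked height linearly onto [-court_half_height, court_half_height], clamped."""
    t = (tracked_y - tracked_min) / (tracked_max - tracked_min)
    t = min(max(t, 0.0), 1.0)  # keep the racket on the court
    return (2.0 * t - 1.0) * court_half_height
```

Clamping means that moving the rigid body outside the calibrated range simply pins the racket at the edge of the court instead of sending it off screen.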

Week-5 Update

We created the pong game to gauge the precision of the tracking system and found that it is accurate enough to build applications that need very precise tracking. We considered many ideas from different areas, namely entertainment, education, and healthcare, and finally decided to create a virtual drum simulator. The idea is that drummers can play with drumsticks in the air, so they no longer have to carry a bulky drum kit. We created a basic drum set in Unity and associated sounds with its different parts. After that, we started tracking a rigid body to play the drums. Here is a quick demo:

Over the Thanksgiving break, our drumsticks arrived and we attached tracking markers to them. Now we can play the drums with both sticks. We also unpacked the Oculus Rift to run the virtual drums application, and it looked super awesome :)
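One way to turn a tracked stick tip into drum hits is sketched below in Python. Rather than relying only on collider contacts, which can be missed when a fast stick passes through a thin collider between physics steps, it checks whether the tip crossed a pad's surface plane going downward between two consecutive frames, and whether the crossing point lies within the pad's radius. This is an illustrative technique, not necessarily the one used in the simulator; the pad layout and sound file names are hypothetical, and pads are assumed to be horizontal with Y pointing up.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class DrumPad:
    name: str                           # e.g. "snare"
    center: tuple[float, float, float]  # centre of the horizontal pad surface, Y up
    radius: float


# Hypothetical mapping from pad name to the sound clip it should trigger.
SOUNDS = {"snare": "snare.wav", "tom": "tom.wav"}


def detect_hit(prev_tip, tip, pads):
    """Return the pad whose surface the tip crossed going down this frame, or None."""
    for pad in pads:
        surface_y = pad.center[1]
        if prev_tip[1] > surface_y >= tip[1]:
            # Interpolate where along this frame's motion the tip met the plane.
            t = (prev_tip[1] - surface_y) / (prev_tip[1] - tip[1])
            x = prev_tip[0] + t * (tip[0] - prev_tip[0])
            z = prev_tip[2] + t * (tip[2] - prev_tip[2])
            if math.hypot(x - pad.center[0], z - pad.center[2]) <= pad.radius:
                return pad
    return None
```

Each frame, the caller would pass the previous and current tip positions and, when a pad is returned, play `SOUNDS[pad.name]`. Only downward crossings count, so lifting the stick back through the surface does not retrigger the sound.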

Week-6 Update

This week, we spent a lot of time smoothing the drumming mechanism. We tried different camera settings and tracking algorithms to achieve high accuracy with very little delay, but were not able to fully resolve this trade-off. In the meantime, we therefore changed the color of the drums, replaced the spheres with actual drumsticks, and added some collision effects to our virtual drum simulator. Here is a quick demo:
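The accuracy-versus-delay trade-off shows up even in the simplest smoothing filter. The Python sketch below uses an exponential moving average, a stand-in chosen for illustration rather than the algorithm used in the project: a smaller `alpha` suppresses more jitter but makes the output lag further behind the stick.

```python
def ema(samples, alpha):
    """Exponential moving average; alpha in (0, 1], where 1 means no smoothing."""
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    smoothed, state = [], None
    for x in samples:
        state = x if state is None else alpha * x + (1.0 - alpha) * state
        smoothed.append(state)
    return smoothed


def frames_to_reach(smoothed, level):
    """Index of the first frame at or above `level` (None if never reached)."""
    return next((i for i, v in enumerate(smoothed) if v >= level), None)
```

On a step input `[0, 0, 1, 1, 1, 1]`, `alpha = 0.5` reaches 90% of the new position three frames later than the unfiltered signal; at a hypothetical tracking rate of 100 frames per second, that is about 30 ms of added delay.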

Week-7 Update

We received very good feedback from Prof. Rahul Mangharam and other students after Demo Day 1 (4 December 2015). We discussed the next steps for the final week and decided to work on smoothing the drumming process and exploring how fast a person can drum. If time permits, we will also try to calibrate the environment automatically for each new drummer. Following our reach goal of tracking very fast drumming, we completely changed the tracking algorithm and also bought a new, optimized drum set from the Unity Asset Store. Here is a quick demo of how fast a person can drum using our virtual drum simulator:
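Measuring how fast a person can drum needs a working definition of a stroke. A stick that rattles near the pad surface can register several hits in quick succession, so one option, sketched below in Python, is to ignore hits closer together than a minimum interval and then compute strokes per second. The 50 ms interval used in the test is a hypothetical value, not a measured property of the system.

```python
def debounce(hit_times, min_interval):
    """Keep only hits at least `min_interval` seconds after the last kept hit."""
    kept = []
    for t in sorted(hit_times):
        if not kept or t - kept[-1] >= min_interval:
            kept.append(t)
    return kept


def strokes_per_second(kept):
    """Average stroke rate between the first and last kept hit."""
    if len(kept) < 2:
        return 0.0
    span = kept[-1] - kept[0]
    return (len(kept) - 1) / span if span > 0 else 0.0
```

For hits at 0.0, 0.1, 0.12, 0.2, and 0.3 seconds with a 0.05 s interval, the hit at 0.12 s is treated as a rattle and dropped, leaving four strokes at 10 strokes per second.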

Final Update

Here, finally, are the final deliverables of our project.

Tutorials

We have also created tutorials explaining the different parts of the project so that someone in the future can easily pick up where we left off and take this project to a completely different level. You can use this document and the following videos to quickly get started with a similar project. Below is a demonstration of the calibration process of the motion tracking system with OptiTrack Motive:

Besides calibration, we have also created a tutorial on how to use the APIs we used or created to stream tracking data from OptiTrack to Unity, so that game objects can be controlled using rigid bodies.
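OptiTrack's NatNet streaming protocol carries the real tracking data, and its format is not reproduced here. To illustrate only the idea of binding streamed rigid-body poses to game objects, the Python sketch below decodes an invented, simplified packet layout (a rigid-body ID followed by a position and a quaternion) and keeps the latest pose for each bound object. The layout, function names, and object names are all hypothetical.

```python
import struct

# Hypothetical little-endian layout: uint32 rigid-body id, 3 float32 position,
# 4 float32 quaternion (x, y, z, w). This is NOT the NatNet wire format.
POSE_FORMAT = struct.Struct("<I3f4f")


def decode_pose(packet: bytes):
    """Unpack one packet into (body_id, position, rotation)."""
    body_id, x, y, z, qx, qy, qz, qw = POSE_FORMAT.unpack(packet)
    return body_id, (x, y, z), (qx, qy, qz, qw)


def apply_poses(packets, bindings):
    """bindings maps rigid-body id -> game object name; returns latest pose per object."""
    latest = {}
    for packet in packets:
        body_id, position, rotation = decode_pose(packet)
        name = bindings.get(body_id)
        if name is not None:  # ignore rigid bodies with no bound game object
            latest[name] = (position, rotation)
    return latest
```

Keeping only the latest pose per object means a game loop that runs slower than the tracker simply uses the freshest data each frame instead of falling behind.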